“WebQuerySave”, “PostOpen” and all their siblings have been a bastion of Domino and Notes development since time out of mind. They exist in near-identical form in Salesforce, where they are just called Triggers.

Just as Notes/Domino has different events that let code ‘Do Stuff’ to records (e.g. “WebQueryOpen”, “OnLoad”, “WebQuerySave” etc.), Salesforce has the same sort of thing. In its case they are broken down into two parts: Timings and Events.

Timings: Before and After

Before: The event has started but the record has not yet been saved. This maps roughly to the “Query” events in Domino.

If you want to calculate fields and stuff, or change values in the record you are saving, this is the time to do it. You don’t have to tell it to save or commit the records as you normally would; Salesforce saves them after your code has run.

After: The record has been saved and all field values have been calculated, then the After event is run.

If you want to update other objects on the basis of this record being created/saved, do it here. You can’t edit the record you are saving (the records in Trigger.New are read-only in After triggers), but lots of useful bits such as the record Id and who saved it are available in the After event.{{You know that pain-in-the-butt thing you sometimes have to do in Domino, where you have to use the NoteID rather than the Document ID before a document is saved? This gets round that issue.}}
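To make the Before/After split concrete, here is a minimal sketch on the Case object. The trigger name, the default Subject text and the follow-up Task are made-up examples, not something Salesforce does for you. In the before part we just change fields on Trigger.new and never call `update`. In the after part the Case now has an Id, so we can hang a new Task off it.

```apex
// Hypothetical example trigger: the default Subject and the follow-up Task are illustrative only
trigger CaseBeforeAfterExample on Case (before insert, after insert) {

    if (Trigger.isBefore) {
        // Before: change the records in Trigger.new directly.
        // No insert/update call needed; Salesforce saves them after this code runs.
        for (Case c : Trigger.new) {
            if (String.isBlank(c.Subject)) {
                c.Subject = 'No subject supplied';
            }
        }
    } else {
        // After: Trigger.new is read-only now, but the Id (and CreatedById etc.) are populated,
        // so we can create related records that point at the saved Case.
        List<Task> followUps = new List<Task>();
        for (Case c : Trigger.new) {
            followUps.add(new Task(
                Subject = 'Follow up on case ' + c.CaseNumber,
                WhatId = c.Id
            ));
        }
        // One DML statement for the whole batch rather than one per record
        insert followUps;
    }
}
```

The Task is inserted from one list after the loop. That keeps it to a single DML statement however many Cases arrive in the batch.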

Events: Insert, Update, Delete and Undelete

These are exactly what they say: Insert is like creating a new document, Update is editing an existing document, Delete is deleting one, and Undelete is restoring one from the Recycle Bin.

This gives us the following set of event types:

- before insert

- before update

- before delete

- after insert

- after update

- after delete

- after undelete{{Yes, eagle eyes, there is no “before undelete”.}}

Now, you can have a separate trigger for each of these events, but I have found that this bites you in the bum. They start to argue with each other, and they are hard to keep straight when things get complex (Salesforce does not guarantee the order in which multiple triggers on the same object fire for the same event). So I tend to have one trigger for all events, with a bit of logic in it to determine what’s going to happen when.

Here is the basic template I start all my triggers with:

```apex
trigger XXXXTriggerAllEvents on XXXX (
    before insert,
    before update,
    before delete,
    after insert,
    after update,
    after delete,
    after undelete) {

    if (Trigger.isInsert || Trigger.isUpdate) {

        if (Trigger.isUpdate && Trigger.isAfter) {
            // Compare new values with old ones once the update has been saved
            MYScriptLibarary.DoStuffAfterAnUpdate(Trigger.New, Trigger.OldMap);
        } else if (Trigger.isInsert && Trigger.isAfter) {
            // Do some stuff here to do with a new document being created, like sending emails
            // (after insert, so the record has been saved and has an Id)
        }
    }
}
```

As you can see, you can determine which event you are dealing with by testing for “Trigger.isInsert”, “Trigger.isAfter” and so on, and then run the right bit of code for what you want. Again, I like to keep everything in easy sight, so I use functions wherever I can, with nice, easy-to-understand names.
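On newer API versions there is also `Trigger.operationType`, which returns a `System.TriggerOperation` enum value. That lets you route each event with a single `switch` rather than combining the `isInsert`/`isAfter` flags. Below is a sketch of the same all-events template written that way, keeping the `XXXX` placeholder and the author's helper class. The comments note which context collections Salesforce populates in each event.

```apex
trigger XXXXTriggerAllEvents on XXXX (
    before insert, before update, before delete,
    after insert, after update, after delete, after undelete) {

    switch on Trigger.operationType {
        when BEFORE_INSERT {
            // Trigger.new only (editable); records have no Id yet
        }
        when BEFORE_UPDATE {
            // Trigger.new / Trigger.newMap (editable), Trigger.old / Trigger.oldMap
        }
        when BEFORE_DELETE {
            // Trigger.old / Trigger.oldMap only
        }
        when AFTER_INSERT {
            // Trigger.new / Trigger.newMap (read-only); Ids now exist
        }
        when AFTER_UPDATE {
            MYScriptLibarary.DoStuffAfterAnUpdate(Trigger.new, Trigger.oldMap);
        }
        when AFTER_DELETE {
            // Trigger.old / Trigger.oldMap only
        }
        when AFTER_UNDELETE {
            // Trigger.new / Trigger.newMap (read-only)
        }
    }
}
```

Which style you use is mostly taste. The `switch` version makes it harder to accidentally run "insert" code in both the before and after phases.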

In the above case, I want to check a field after an update to see if it has changed from empty to containing a value. You can do this with the very, very useful ‘Trigger.New’ and ‘Trigger.OldMap’, as you can see below:

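```apex
public with sharing class MYScriptLibarary {

    // Called from the after update branch of the trigger.
    // newXXXX = Trigger.New (records as saved), oldXXXX = Trigger.OldMap (pre-update values keyed by Id)
    public static void DoStuffAfterAnUpdate(List<XXXX> newXXXX, Map<ID, XXXX> oldXXXX) {
        for (XXXX currentXXXX : newXXXX) {
            // Only interested when MyField has gone from empty to containing a value
            if (!String.isBlank(currentXXXX.MyField) && String.isBlank(oldXXXX.get(currentXXXX.Id).MyField)) {
                System.debug('OMG!!! MyField changed, DO SOMETHING');
            }
        }
    }
}
```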

So we are taking the list of objects that caused the trigger to run, i.e. “Trigger.New”.{{You are best off writing all code to handle batches of records rather than the single document you are used to in Domino. A single trigger run can receive up to 200 records at once. We will cover batching in a later blog, but just take my word for it for the moment.}} We loop through them and compare them to the values in “Trigger.OldMap” (which holds the old values, keyed by record Id) to see if things have changed.
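In practice the `System.debug` line is where the real work goes. The usual way to keep that batch-friendly is to collect what needs doing inside the loop and do any DML once, after it. Below is a minimal sketch of that pattern. The class and method names are hypothetical, and so is the "do something" (creating a Task). It also assumes `XXXX` is an object Tasks can relate to via `WhatId` (e.g. Case or Account) and that `MyField` is a text field.

```apex
public with sharing class MYScriptLibararyBulk {   // hypothetical class name

    // Hypothetical bulk-safe variant of DoStuffAfterAnUpdate
    public static void DoStuffAfterAnUpdateBulk(List<XXXX> newXXXX, Map<Id, XXXX> oldXXXX) {
        List<Task> tasksToCreate = new List<Task>();

        // 1. Loop once over the whole batch, only collecting work: no SOQL or DML in here
        for (XXXX currentXXXX : newXXXX) {
            XXXX previousXXXX = oldXXXX.get(currentXXXX.Id);
            if (!String.isBlank(currentXXXX.MyField) && String.isBlank(previousXXXX.MyField)) {
                tasksToCreate.add(new Task(
                    Subject = 'MyField was filled in',
                    WhatId = currentXXXX.Id
                ));
            }
        }

        // 2. One DML statement for the whole batch, however many records changed
        if (!tasksToCreate.isEmpty()) {
            insert tasksToCreate;
        }
    }
}
```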

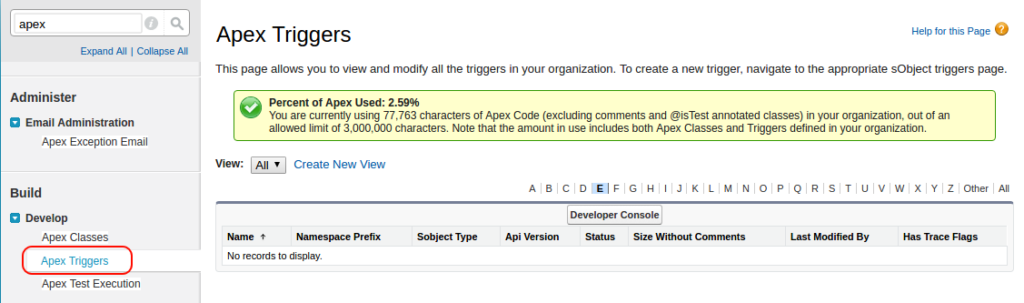

So that is the theory over. You can see existing triggers by going into Setup and searching for “Apex Triggers”.

BUT you can’t create them from there; you create them from the object you want them to act on.

Let’s take the Case object as an example.

In Setup, search for “Case”, click on “Case Triggers” and then on “New”.

That will give you the default trigger. Let’s swap that out for the all-events trigger I showed above, with XXXX replaced by Case.

Better. Then just click Save and your trigger will be live (in a sandbox or developer org, that is; in production, Apex has to be deployed with test coverage). Simples.

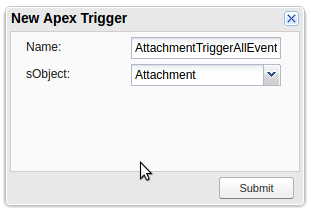

Now, there is an alternative way to make triggers, and you sometimes have to use it when you want to create a trigger for an object that does not appear in Setup, such as the Attachment object.

You will first need to open the Developer Console: click your name in the top right and select “Developer Console” (in Lightning Experience it is under the gear icon). Then select File → New → Apex Trigger.

Select “Attachment” as the sObject and give it a sensible name.

And now you can write a trigger against an object that you normally don’t see.
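As an illustration, here is a minimal sketch of what might go in such an Attachment trigger. It picks out attachments whose parent record is a Case, using `Id.getSObjectType()`. The trigger name and the debug "do something" are made up. One caveat, as far as I know: this only fires for classic Notes & Attachments. Files uploaded through Salesforce Files are stored as ContentDocument/ContentVersion records and won't hit an Attachment trigger.

```apex
// Hypothetical trigger name; the "do something" is just a debug line
trigger AttachmentTriggerAllEvents on Attachment (after insert) {

    List<Attachment> caseAttachments = new List<Attachment>();

    for (Attachment att : Trigger.new) {
        // ParentId can point at many object types, so check which one this is
        if (att.ParentId != null && att.ParentId.getSObjectType() == Case.SObjectType) {
            caseAttachments.add(att);
        }
    }

    System.debug(caseAttachments.size() + ' attachment(s) added to Cases in this batch');
}
```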

Final Notes:

1. Salesforce process flows can fight with your triggers. If you suddenly get “A flow trigger failed to Execute”, go and check whether your power users have been playing with the process flows.

2. Make sure you have security set correctly, particularly with community users. Both security profiles and sharing settings can screw with your triggers if the user can’t see or write to the fields involved.

3. As always, make sure your code works if there are millions of records in Salesforce. CODE TO CATER FOR LIMITS (Salesforce’s governor limits).
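One way to keep yourself honest on point 3 is a test that pushes a full batch through the trigger in one go. Below is a minimal sketch for a Case trigger. The class name and field values are assumptions, and your org may have extra required fields or validation rules on Case. It uses 200 records because that is the chunk size a trigger receives records in.

```apex
@isTest
private class CaseTriggerAllEventsTest {   // hypothetical test class name

    @isTest
    static void handlesAFullBatchOf200() {
        List<Case> cases = new List<Case>();
        for (Integer i = 0; i < 200; i++) {
            // Subject/Origin values are placeholders; add whatever your org requires
            cases.add(new Case(Subject = 'Bulk test ' + i, Origin = 'Web'));
        }

        Test.startTest();
        insert cases;   // the insert triggers run once for all 200 records
        for (Case c : cases) {
            c.Subject = c.Subject + ' (updated)';
        }
        update cases;   // the update triggers run once for all 200 records
        Test.stopTest();

        // SOQL or DML inside a per-record loop in the trigger would typically blow a governor limit before this point
        System.assertEquals(200, [SELECT COUNT() FROM Case WHERE Subject LIKE 'Bulk test %']);
    }
}
```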